We know from linear algebra that if $v$ is an eigenvector, then so is $cv$ for any constant $c \neq 0$.
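Any nonzero multiple of an eigenvector is still an eigenvector, so an eigenvector returned by a solver can be turned into a probability vector by choosing $c = 1/(\text{sum of its entries})$. Here is a minimal Python sketch. It assumes the eigenvector is given as a list whose entries do not sum to zero. The function name `to_probability_vector` is hypothetical.

```python
from fractions import Fraction

def to_probability_vector(v):
    """Rescale v by c = 1 / sum(v) so that its entries add up to 1.

    Every nonzero multiple of an eigenvector is again an eigenvector, so this
    picks out the one multiple that is a probability vector.
    """
    total = sum(v)
    if total == 0:
        raise ValueError("entries sum to zero; cannot normalize")
    return [x / total for x in v]

# Hypothetical eigenvector with negative entries; scaling by c = -1/7 fixes the sign too.
v = [Fraction(-3), Fraction(-4)]
print(to_probability_vector(v))  # [Fraction(3, 7), Fraction(4, 7)]
```

Using `Fraction` keeps the arithmetic exact. Plain floats work too, but they introduce rounding.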

Find the Vector of Stable Probabilities for a Markov Chain

Stable vector of probabilities.

Find the vector of stable probabilities for the Markov chain whose transition matrix is

$$P = \begin{pmatrix} 0.6 & 0.4 & 0 \\ 0 & 0.7 & 0.3 \\ 0.1 & 0 & 0.9 \end{pmatrix}.$$

Find the stable vector of a Markov matrix.
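As a worked check, assume the digits 6 4 0 / 0 7 3 / 1 0 9 are read as the row-stochastic matrix with rows $(0.6, 0.4, 0)$, $(0, 0.7, 0.3)$, and $(0.1, 0, 0.9)$. Each of those rows sums to 1. Writing $wP = w$ component by component for $w = (w_1, w_2, w_3)$ then gives:

$$
\begin{aligned}
w_1 &= 0.6\,w_1 + 0.1\,w_3 &&\Rightarrow\; w_3 = 4w_1,\\
w_2 &= 0.4\,w_1 + 0.7\,w_2 &&\Rightarrow\; w_2 = \tfrac{4}{3}\,w_1,\\
w_3 &= 0.3\,w_2 + 0.9\,w_3 &&\Rightarrow\; w_3 = 3w_2 \quad (\text{consistent with the first two}),\\
w_1 + w_2 + w_3 &= \left(1 + \tfrac{4}{3} + 4\right) w_1 = \tfrac{19}{3}\,w_1 = 1 &&\Rightarrow\; w_1 = \tfrac{3}{19}.
\end{aligned}
$$

So, under that reading of the matrix, $w = \left(\tfrac{3}{19}, \tfrac{4}{19}, \tfrac{12}{19}\right)$.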

$$VP = V, \qquad Ve = 1,$$

where $e = (1, 1, \ldots, 1)^T$ is a column vector with all its elements equal to unity. For each operation, the calculator writes a step-by-step, easy-to-understand explanation of how the work has been done. $-0.4x + 0.3y = 0$.
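The conditions $VP = V$ and $Ve = 1$ can be solved together as a single linear system:

1. Transpose $VP = V$ to get $(P^T - I)V^T = 0$.
2. Drop one of those equations. They add up to $0 = 0$ because each row of $P$ sums to 1, so any one of them is redundant.
3. Put the normalization $\sum_k V_k = 1$ in its place.

Below is a minimal pure-Python sketch using exact fractions. It assumes $P$ is row-stochastic and that the solution is unique, as in the finite irreducible case. The helper name `stable_vector` is hypothetical. The example matrix is the 3×3 exercise matrix with its decimal points restored, which is itself an assumption about the garbled original.

```python
from fractions import Fraction

def stable_vector(P):
    """Solve V P = V with V e = 1 for a row-stochastic matrix P (hypothetical helper).

    Pass entries as ints, Fractions, or decimal strings such as "0.6" so the
    arithmetic stays exact. Assumes the solution is unique.
    """
    n = len(P)
    # Equation i of (P^T - I) V^T = 0: sum_k (P[k][i] - [k == i]) * V_k = 0
    A = [[Fraction(P[k][i]) - (1 if k == i else 0) for k in range(n)] + [Fraction(0)]
         for i in range(n)]
    # The n equations add up to 0 = 0, so replace the last one with V e = 1.
    A[-1] = [Fraction(1)] * n + [Fraction(1)]
    # Gauss-Jordan elimination on the augmented matrix.
    for col in range(n):
        pivot_row = next(r for r in range(col, n) if A[r][col] != 0)
        A[col], A[pivot_row] = A[pivot_row], A[col]
        pivot = A[col][col]
        A[col] = [entry / pivot for entry in A[col]]
        for r in range(n):
            if r != col and A[r][col] != 0:
                factor = A[r][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [row[-1] for row in A]

P = [["0.6", "0.4", "0"],
     ["0", "0.7", "0.3"],
     ["0.1", "0", "0.9"]]
print(stable_vector(P))  # [Fraction(3, 19), Fraction(4, 19), Fraction(12, 19)]
```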

`x <- c(1, 2, 3, 4)`; `prob <- c(0.1, 0.2, 0.3, 0.4)`; total sample size `n <- 20`; `result <- rep(x, round(n * prob))`. Here is my work for this problem. It is easy to verify that the resulting vector is a steady-state vector of the matrix above.
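For readers not using R, the same deterministic allocation can be sketched in Python. This is an illustrative translation, not part of the original answer. `deterministic_sample` is a hypothetical helper. Because each count is rounded, the total can differ slightly from `n` when `n * p` is not a whole number.

```python
def deterministic_sample(values, probs, n):
    """Repeat each value round(n * p) times, like R's rep(x, round(n * prob))."""
    counts = [round(n * p) for p in probs]
    return [v for v, c in zip(values, counts) for _ in range(c)]

x = [1, 2, 3, 4]
prob = [0.1, 0.2, 0.3, 0.4]
result = deterministic_sample(x, prob, 20)  # total sample size n = 20
print(result)
```

To randomize the order afterwards, `random.shuffle(result)` permutes the list in place.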

In mathematics and statistics, a probability vector (or stochastic vector) is a vector with non-negative entries that add up to one. You can then randomly permute the resulting vector (for example, with `sample(result)` in R). A stochastic matrix is a square matrix whose columns are probability vectors. In the row convention used with $wP = w$, it is the rows that sum to 1.
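These definitions translate directly into checks. Below is a minimal Python sketch. It assumes matrices are stored as lists of rows and uses a small tolerance for floating-point sums. The function names are hypothetical. The column test matches the column convention in the definition above; for the row convention ($wP = w$), you would check the rows instead.

```python
def is_probability_vector(v, tol=1e-12):
    """True if every entry is non-negative and the entries add up to one (within tol)."""
    return all(x >= 0 for x in v) and abs(sum(v) - 1) <= tol

def is_column_stochastic(M, tol=1e-12):
    """True if M is square and each of its columns is a probability vector."""
    n = len(M)
    if any(len(row) != n for row in M):
        return False
    return all(is_probability_vector([M[i][j] for i in range(n)], tol) for j in range(n))

M = [[0.5, 0.2],
     [0.5, 0.8]]  # hypothetical example: each column sums to 1
print(is_column_stochastic(M))  # True
print(is_column_stochastic([[0.6, 0.4],
                            [0.3, 0.7]]))  # False: its rows, not its columns, sum to 1
```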

So if the populations of the city and the suburbs are given by the steady-state vector, after one year the proportions remain the same, even though people may move between the city and the suburbs. If we define the limiting-state probability vector $\pi = [\pi_1, \pi_2, \ldots, \pi_N]$, then from (12.28) we have $\pi = \pi P$ and

$$\pi_j = \sum_k \pi_k p_{kj} \tag{12.29a}$$

$$1 = \sum_j \pi_j \tag{12.29b}$$

where the last equation is due to the law of total probability.
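The two limiting-state conditions, $\pi_j = \sum_k \pi_k p_{kj}$ for every $j$ and $\sum_j \pi_j = 1$, can be checked term by term for a candidate vector. Below is a minimal sketch in plain Python. The 2×2 matrix and the candidate $\pi = (3/7, 4/7)$ are hypothetical values chosen for illustration.

```python
def satisfies_limiting_equations(pi, P, tol=1e-9):
    """Check pi_j = sum_k pi_k * p_kj for every j, and sum_j pi_j = 1."""
    n = len(pi)
    # Balance: each pi_j equals the probability flowing into state j in one step.
    balance = all(
        abs(pi[j] - sum(pi[k] * P[k][j] for k in range(n))) <= tol
        for j in range(n)
    )
    # Law of total probability: the entries sum to 1.
    normalized = abs(sum(pi) - 1) <= tol
    return balance and normalized

P = [[0.6, 0.4],
     [0.3, 0.7]]
print(satisfies_limiting_equations([3 / 7, 4 / 7], P))  # True
print(satisfies_limiting_equations([0.5, 0.5], P))      # False
```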

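A Markov chain is a sequence of probability vectors $\mathbf{x}_0, \mathbf{x}_1, \mathbf{x}_2, \ldots$, together with a stochastic matrix $P$, such that $\mathbf{x}_{k+1} = P\mathbf{x}_k$ for $k = 0, 1, 2, \ldots$. Find the vector of stable probabilities for the Markov chain whose transition matrix is

$$\begin{pmatrix} 0 & 1 & 0 \\ 0.4 & 0.3 & 0.3 \\ 0 & 1 & 0 \end{pmatrix}.$$

Calculates the matrix-vector product.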
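Under the column convention, each state vector is obtained by multiplying the previous one by a column-stochastic matrix $P$ ($\mathbf{x}_{k+1} = P\mathbf{x}_k$). The first few vectors of the chain can then be generated directly. Below is a minimal sketch. The helper `markov_sequence` and the 2×2 matrix are hypothetical.

```python
def markov_sequence(P, x0, steps):
    """Return [x0, x1, ..., x_steps] where x_{k+1} = P x_k and P is column-stochastic."""
    xs = [list(x0)]
    for _ in range(steps):
        x = xs[-1]
        # (P x)_i = sum_j P[i][j] * x[j]
        xs.append([sum(P[i][j] * x[j] for j in range(len(x))) for i in range(len(P))])
    return xs

P = [[0.5, 0.2],
     [0.5, 0.8]]  # hypothetical column-stochastic matrix
for k, x in enumerate(markov_sequence(P, [1.0, 0.0], 3)):
    print(k, x)
```

Every vector in the sequence is again a probability vector, because multiplying by a column-stochastic matrix preserves non-negativity and the total of 1.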

Calculate the vector of state probabilities for period $n + 1$. 16-71. Given the following vector of state probabilities and the accompanying matrix of transition probabilities. Probability of drawing a blue and then a black marble, using the probabilities calculated above.
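For a row vector of state probabilities $\pi(n)$ and a transition matrix $P$ whose rows sum to 1, the next period's vector is $\pi(n+1) = \pi(n)P$. Below is a minimal sketch with exact fractions. The starting vector and matrix are hypothetical values, not those of problem 16-71, and `next_period` is a made-up name.

```python
from fractions import Fraction

def next_period(state, P):
    """pi(n+1) = pi(n) P: entry j is sum_i pi_i(n) * P[i][j]."""
    return [sum(state[i] * P[i][j] for i in range(len(state))) for j in range(len(P[0]))]

state = [Fraction(1, 2), Fraction(1, 2)]
P = [[Fraction(3, 5), Fraction(2, 5)],
     [Fraction(3, 10), Fraction(7, 10)]]
print(next_period(state, P))  # [Fraction(9, 20), Fraction(11, 20)]
```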

Find the vector of stable probabilities for the Markov chain whose transition matrix is given.

Create a Stable Distribution Object Using Specified Parameters: create a stable distribution object by specifying the parameter values `alpha = 0.5`, `beta = 0`, `gam = 1`, and `delta = 0`. Here "stable" refers to the heavy-tailed alpha-stable family of probability distributions, not to the stable vector of a Markov chain.

`pd = makedist('Stable','alpha',0.5,'beta',0,'gam',1,'delta',0)`

(Screenshot: the Markov Process program from Deever's Mathematics of Decision Making Programs, Otterbein College, 1999. It shows Next State, Calculate Steady State, Check Rows, and Normalize Rows controls, plus a Format Control dialog for decimal places and column width.)

A matrix and a vector can be multiplied only if the number of columns of the matrix equals the dimension of the vector.
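The dimension rule is easy to enforce in code. Below is a minimal sketch. `mat_vec` is a hypothetical helper that computes $(Ax)_i = \sum_j a_{ij} x_j$ and refuses mismatched shapes.

```python
def mat_vec(A, x):
    """Return A x for A given as a list of rows; (A x)_i = sum_j a_ij * x_j."""
    for row in A:
        if len(row) != len(x):
            raise ValueError(
                f"cannot multiply: matrix row has {len(row)} columns, vector has {len(x)} entries"
            )
    return [sum(a * b for a, b in zip(row, x)) for row in A]

print(mat_vec([[1, 2], [3, 4]], [5, 6]))  # [17, 39]
```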

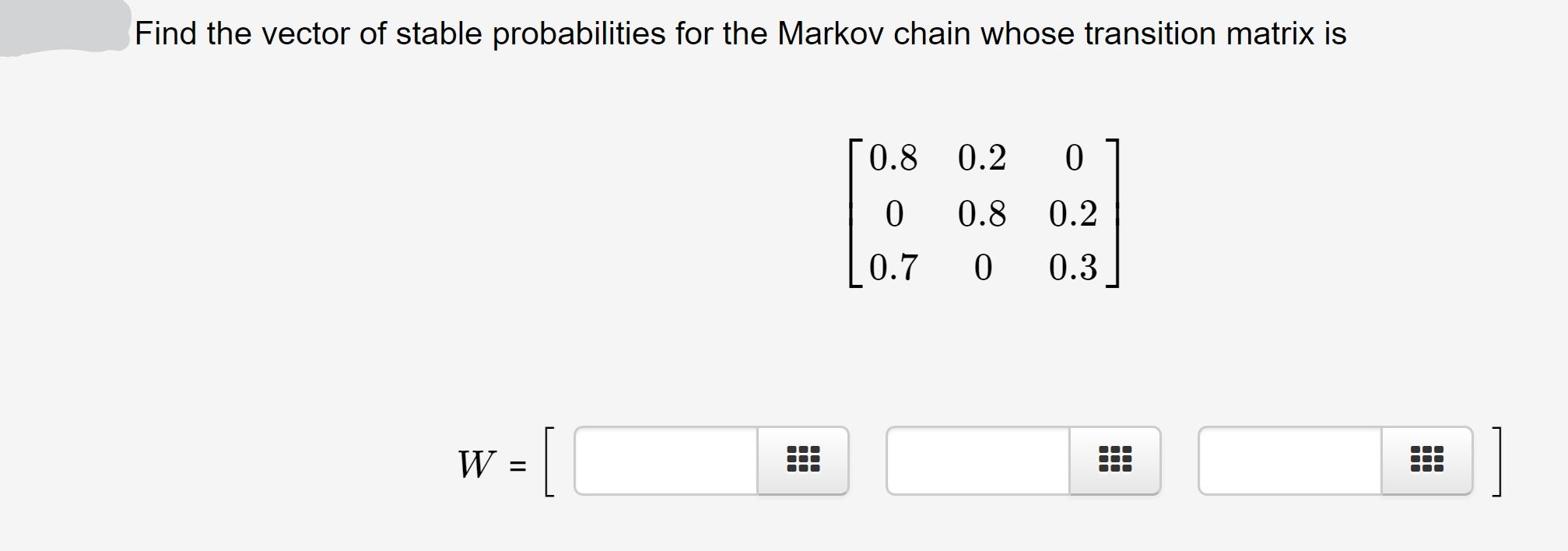

Probability vector in stable state. The stable vector is the probability row vector $w$ such that $wP = w$.
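For a two-state chain, the equation $wP = w$ has a closed-form solution. Write

$$P = \begin{pmatrix} 1-a & a \\ b & 1-b \end{pmatrix}.$$

The first component of $wP = w$ gives $w_1 a = w_2 b$. Combined with $w_1 + w_2 = 1$, this yields $w = \left(\frac{b}{a+b}, \frac{a}{a+b}\right)$ whenever $a + b > 0$. Below is a small Python sketch of that formula. The function name and the example values $a = 0.4$, $b = 0.3$ are hypothetical.

```python
from fractions import Fraction

def stable_vector_2x2(a, b):
    """Stable row vector w of P = [[1 - a, a], [b, 1 - b]], assuming a + b > 0."""
    a, b = Fraction(a), Fraction(b)
    return [b / (a + b), a / (a + b)]

w = stable_vector_2x2("0.4", "0.3")
print(w)  # [Fraction(3, 7), Fraction(4, 7)]

# Verify wP = w exactly.
a, b = Fraction("0.4"), Fraction("0.3")
P = [[1 - a, a], [b, 1 - b]]
wP = [w[0] * P[0][j] + w[1] * P[1][j] for j in range(2)]
assert wP == w
```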

For a finite irreducible Markov chain with transition probability matrix (TPM) $P$, an invariant measure exists and is unique. That is, there is a unique probability vector $V$ such that $VP = V$ and $Ve = 1$.
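Uniqueness shows up in the spectrum of $P$. For a finite irreducible chain, 1 is a simple eigenvalue of $P$ (a consequence of the Perron-Frobenius theorem). The stable vector is therefore determined up to scaling, and $Ve = 1$ fixes the scale. Below is a rough numerical check with NumPy. It assumes floating-point eigenvalues are accurate to the chosen tolerance. `count_unit_eigenvalues` and both example matrices are hypothetical.

```python
import numpy as np

def count_unit_eigenvalues(P, tol=1e-9):
    """Number of eigenvalues of P within tol of 1 (a numerical estimate of multiplicity)."""
    eigenvalues = np.linalg.eigvals(np.asarray(P, dtype=float))
    return int(np.sum(np.abs(eigenvalues - 1) < tol))

irreducible = [[0.6, 0.4],
               [0.3, 0.7]]
reducible = [[1.0, 0.0],
             [0.0, 1.0]]  # two absorbing states: every probability vector is stable
print(count_unit_eigenvalues(irreducible))  # 1
print(count_unit_eigenvalues(reducible))    # 2
```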

$n$th power of the probability matrix. Calculator for finite Markov chain (by FUKUDA Hiroshi, 2004.10.12). Input probability matrix $P$ ($P_{ij}$, transition probability from $i$ to $j$).
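Entry $(i, j)$ of $P^n$ is the probability of moving from $i$ to $j$ in exactly $n$ steps. For a regular chain, every row of $P^n$ approaches the stable vector as $n$ grows. Below is a pure-Python sketch using repeated squaring. The helpers and the example matrix are hypothetical. With NumPy, `numpy.linalg.matrix_power` does the same job.

```python
def mat_mul(A, B):
    """Product of two square matrices stored as lists of rows."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)] for i in range(n)]

def mat_power(P, n):
    """Return P^n (n >= 0) by repeated squaring."""
    size = len(P)
    result = [[1.0 if i == j else 0.0 for j in range(size)] for i in range(size)]  # identity
    base = P
    while n > 0:
        if n & 1:  # this power of two is part of n, so include it in the product
            result = mat_mul(result, base)
        base = mat_mul(base, base)
        n >>= 1
    return result

P = [[0.6, 0.4],
     [0.3, 0.7]]
P50 = mat_power(P, 50)
print(P50)  # both rows are very close to [3/7, 4/7]
```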

Matrix $A = (a_{ij})$, vector $x = (x_j)$: matrix-vector product $(Ax)_i = \sum_j a_{ij} x_j$. I'm trying to write a program in Scilab (hopefully the same applies to MATLAB) that finds the time at which a stable vector is reached. That is, after repeatedly multiplying the vector by the matrix, the result stops changing. Calculate the mean of the distribution.
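One way to answer the Scilab question is sketched here in Python rather than Scilab. Keep multiplying the row vector by the matrix, stop when two successive vectors differ by less than a tolerance, and report how many steps that took. The tolerance, the step cap, and the name `steps_to_stable` are assumptions. A periodic chain never settles, which is why the loop is capped.

```python
def steps_to_stable(P, x0, tol=1e-10, max_steps=10_000):
    """Repeat x <- x P until successive vectors differ by less than tol.

    Returns (steps, x). Raises RuntimeError if not converged within max_steps.
    """
    x = list(x0)
    for step in range(1, max_steps + 1):
        nxt = [sum(x[i] * P[i][j] for i in range(len(x))) for j in range(len(P[0]))]
        if max(abs(a - b) for a, b in zip(nxt, x)) < tol:
            return step, nxt
        x = nxt
    raise RuntimeError("no stable vector reached within max_steps")

P = [[0.6, 0.4],
     [0.3, 0.7]]  # hypothetical 2x2 chain
steps, x = steps_to_stable(P, [1.0, 0.0])
print(steps, x)
```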

Since you want the number of repetitions of each value to be deterministic rather than random, use `rep` instead of `sample` to repeat each value in proportion to its probability in `prob`. $P(A \cap B) = P(A)\,P(B \mid A) = \frac{3}{10} \times \frac{7}{9} \approx 0.2333$. Union of A and B: in probability, the union of events, $P(A \cup B)$, covers the case where any or all of the events being considered occur, as shown in the Venn diagram below.
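The multiplication rule and the addition (union) rule can be checked with exact fractions. Below is a minimal sketch. $P(A) = 3/10$ and $P(B \mid A) = 7/9$ come from the calculation above. $P(B) = 1/2$ is a hypothetical value, needed only for the union because the text does not give it. The helper names are also hypothetical.

```python
from fractions import Fraction

def p_intersection(p_a, p_b_given_a):
    """Multiplication rule: P(A and B) = P(A) * P(B | A)."""
    return p_a * p_b_given_a

def p_union(p_a, p_b, p_a_and_b):
    """Addition rule: P(A or B) = P(A) + P(B) - P(A and B)."""
    return p_a + p_b - p_a_and_b

p_ab = p_intersection(Fraction(3, 10), Fraction(7, 9))
print(p_ab)  # 7/30
print(p_union(Fraction(3, 10), Fraction(1, 2), p_ab))  # 17/30, using the hypothetical P(B) = 1/2
```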

Find the vector of stable probabilities for the Markov chain with this transition matrix. These two equations give a. Probability vector, Markov chains, stochastic matrix: Section 4.9, Applications to Markov Chains. A probability vector is a vector with nonnegative entries that add up to 1.

Calculator for Finite Markov Chain Stationary Distribution (Riya Danait, 2020). Input probability matrix $P$ ($P_{ij}$, transition probability from $i$ to $j$). To find the long-term probabilities of sunny and cloudy days, we must find the eigenvector of $A$ associated with the eigenvalue $\lambda = 1$. Enter the transition matrix and initial state vector.
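With NumPy, the eigenvector for $\lambda = 1$ can be computed and then rescaled so that its entries sum to 1. This sketch rests on several assumptions. $A$ is taken to be column-stochastic, so column $j$ holds tomorrow's probabilities given today's state $j$. The weather numbers below are hypothetical, not taken from the original example. `steady_state_eig` is likewise a made-up name.

```python
import numpy as np

def steady_state_eig(A):
    """Eigenvector of a column-stochastic A for eigenvalue 1, scaled so its entries sum to 1."""
    eigenvalues, eigenvectors = np.linalg.eig(np.asarray(A, dtype=float))
    k = int(np.argmin(np.abs(eigenvalues - 1)))  # index of the eigenvalue closest to 1
    v = np.real(eigenvectors[:, k])
    return v / v.sum()  # dividing by the sum also fixes an overall sign flip

# Hypothetical weather chain: state 0 = sunny, state 1 = cloudy.
A = [[0.9, 0.5],
     [0.1, 0.5]]
print(steady_state_eig(A))  # approximately [5/6, 1/6]
```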

You can add and subtract vectors, find their length, find vector projections, and compute the dot and cross product of two vectors. We next discuss the limiting behavior of chains.

Markov Process Calculator v. The stable vector $w$ is the eigenvector of $P$ for eigenvalue 1 (acting on row vectors, $wP = w$) that is also a probability vector.

The positions (indices) of a probability vector represent the possible outcomes of a discrete random variable, and the vector gives the probability mass function of that random variable, which is the standard way of characterizing a discrete probability distribution. Chapter 16, Markov Analysis, problem 16-70: Given the following matrix of transition probabilities, find the equilibrium state. Matrix-vector product calculator.

Vector calculator: this calculator performs all vector operations in two- and three-dimensional space. Matrix and Vector Calculator.
